Competition Underway to Identify the Best Predictive Model of the Brain’s Visual Cortex

Running an experiment on a lab animal can be challenging, given the unpredictability of studying a living creature and the costs of such experiments. Simulating these experiments on a computer, a method referred to as "in silico," does away with some of these challenges.

This is especially true in neuroscience, given the complexity of the brain. To run in silico experiments, however, neuroscientists must first develop accurate models of the brain that can reproduce its activity.

This effort to collect data and use it to develop models has gained considerable traction in the last decade, and today large-scale datasets are used to create state-of-the-art predictive models of the brain. And yet, even the most advanced models aren't always able to fully predict and reproduce neural responses. What's more, with so many existing models, there's a need for standardized benchmarks that can help compare models and identify the best ones in the field.

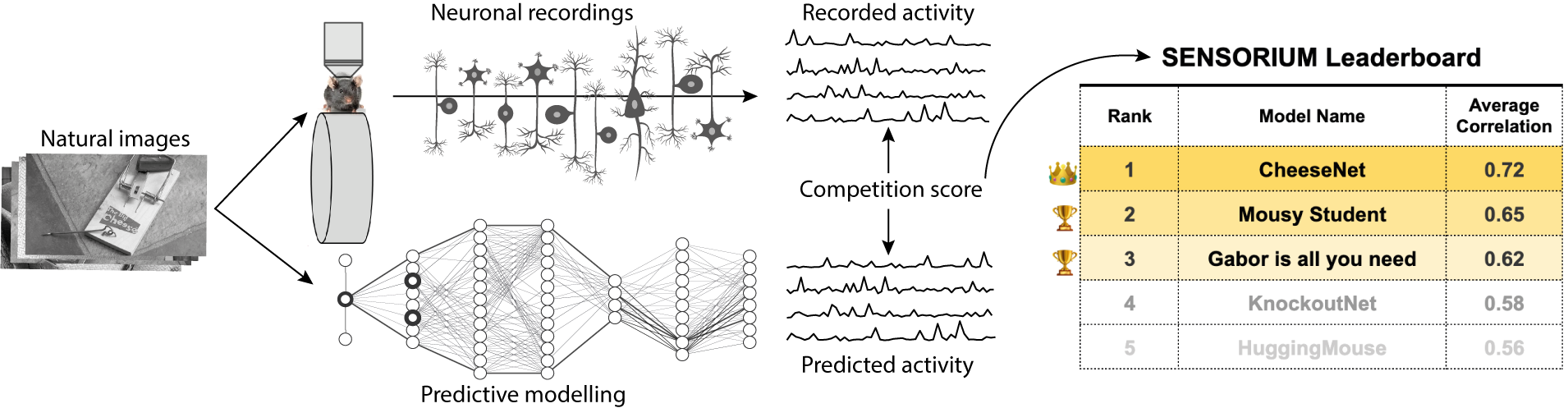

Enter Sensorium 2022, a competition designed to evaluate predictive models of the visual cortex, with the aim of developing baseline models and establishing standardized benchmarks in neural research. The competition, a collaborative effort led by researchers at the Baylor College of Medicine, the University of Tübingen, and the University of Göttingen, will also help define success metrics: agreed-upon ways in which models are evaluated and scored.

The goal of the competition is to provide “a large dataset that the whole community of neuro-researchers can agree on,” says Konstantin Willeke, a graduate student at the University of Tübingen in Germany and one of the competition organizers.

As part of this effort, participants have access to publicly available datasets consisting of activity from more than 28,000 neurons in the primary visual cortex of seven different mice, recorded in response to thousands of images. The datasets also include numerous behavioral measurements, such as running, pupil dilation, and eye movements.

Participants run their models on this data, and the models are then ranked on how well they predict the responses of the recorded neurons in the mouse's visual cortex to the images.
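The article does not say exactly how predictions are scored. One common approach, assumed here purely for illustration, is to compute the correlation between each neuron's predicted and recorded responses across images, average those correlations over neurons, and rank models by that average. The function names and data layout below are hypothetical and are not the competition's actual evaluation code.

```python
from statistics import fmean


def pearson(x, y):
    """Pearson correlation between two equal-length response sequences."""
    mx, my = fmean(x), fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0  # a constant sequence carries no correlation signal
    return cov / (vx * vy) ** 0.5


def score_model(predicted, recorded):
    """Hypothetical score: mean per-neuron correlation.

    Both arguments map a neuron ID to a list of responses, one per image,
    in the same image order.
    """
    return fmean(pearson(predicted[n], recorded[n]) for n in recorded)


def rank_models(submissions, recorded):
    """Return model names sorted from best to worst score."""
    scores = {name: score_model(pred, recorded) for name, pred in submissions.items()}
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Toy data: two neurons, three images (not real Sensorium data).
    recorded = {"n1": [1.0, 2.0, 3.0], "n2": [0.0, 1.0, 0.0]}
    submissions = {
        "model_b": {"n1": [3.0, 2.0, 1.0], "n2": [0.0, 1.0, 0.0]},
        "model_a": {"n1": [1.0, 2.0, 3.0], "n2": [0.0, 1.0, 0.0]},
    }
    print(rank_models(submissions, recorded))
```

Averaging per-neuron correlations rewards a model that captures each neuron's tuning across images, rather than one that only gets the overall activity level right; a real benchmark may also account for trial-to-trial noise in the recordings.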

While benchmarks that focus on visual recognition already exist, this effort is the first of its kind to focus on predicting the activity of a large population of neurons in the primary visual cortex. What's more, computational scientists can use this benchmark to train their models to better predict neural responses. Better models could in turn generate new hypotheses, which systems neuroscientists could then test in living animals.

"If we have a very good model, we can run exhaustive experiments in the models and make predictions that can be tested in vivo," says Andreas Tolias, competition organizer and professor at the Baylor College of Medicine. "So, we would dramatically accelerate the progress of discovery by having this model."

While initial results of the competition are in, official winners will be announced in mid-November. Creators of the top three winning models will provide their code as well as a summary of their work, which will be shared at the NeurIPS conference in December.